Uptime Kuma Setup

In this tutorial we're going to set up Uptime Kuma to monitor services.

Install Docker and Docker Compose, then run Uptime Kuma as a container:

apt install docker.io docker-compose -y

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ cat docker-compose.yml

version: '3.3'
services:
  uptime-kuma:
    image: louislam/uptime-kuma:1
    container_name: uptime-kuma
    volumes:
      - ./uptime-kuma-data:/app/data
    ports:
      - 3001:3001
    restart: always

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ docker-compose up -d

Creating network "uptimekuma_default" with the default driver

Pulling uptime-kuma (louislam/uptime-kuma:latest)...

latest: Pulling from louislam/uptime-kuma

99bf4787315b: Pull complete

61c3159279c7: Pull complete

9f0ec4d0abba: Pull complete

2ddd419399ab: Pull complete

6800c494eddd: Pull complete

a4dccef6dd1b: Pull complete

305b5054c6ba: Pull complete

acb6e4a0ab0e: Pull complete

4f4fb700ef54: Pull complete

5c24a0961ff0: Pull complete

Digest: sha256:0b55bcb83a1c46c6f08bcc0329fc6c1d86039581102ec8db896976a9d46de01d

Status: Downloaded newer image for louislam/uptime-kuma:latest

Creating uptime-kuma ... done

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ ls

docker-compose.yml uptime-kuma-data

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ ls -lash uptime-kuma-data

total 856K

4.0K drwxr-xr-x 3 root root 4.0K Jul 23 16:58 .

4.0K drwxr-xr-x 3 root root 4.0K Jul 23 16:58 ..

60K -rwxr-xr-x 1 root root 60K Jul 23 16:58 kuma.db

32K -rwxr-xr-x 1 root root 32K Jul 23 16:58 kuma.db-shm

752K -rwxr-xr-x 1 root root 749K Jul 23 16:58 kuma.db-wal

4.0K drwxr-xr-x 2 root root 4.0K Jul 23 16:58 upload

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ nmap 127.0.0.1 -p 3001

Starting Nmap 7.93 ( https://nmap.org ) at 2023-07-23 16:58 CEST

Nmap scan report for localhost (127.0.0.1)

Host is up (0.00033s latency).

PORT STATE SERVICE

3001/tcp open nessus

Nmap done: 1 IP address (1 host up) scanned in 0.20 seconds
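The nmap scan above only confirms that something accepts TCP connections on port 3001. The same kind of check can be sketched in a few lines of Python. This is a minimal illustration and not how Uptime Kuma itself is implemented; `port_is_open` is a hypothetical helper name.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection performs the TCP handshake; the socket is closed on exit.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # 3001 is the port published in the docker-compose.yml above.
    state = "open" if port_is_open("127.0.0.1", 3001) else "closed"
    print(f"3001/tcp {state}")
```

A successful handshake only shows that the port is listening, not that the web interface behind it works.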

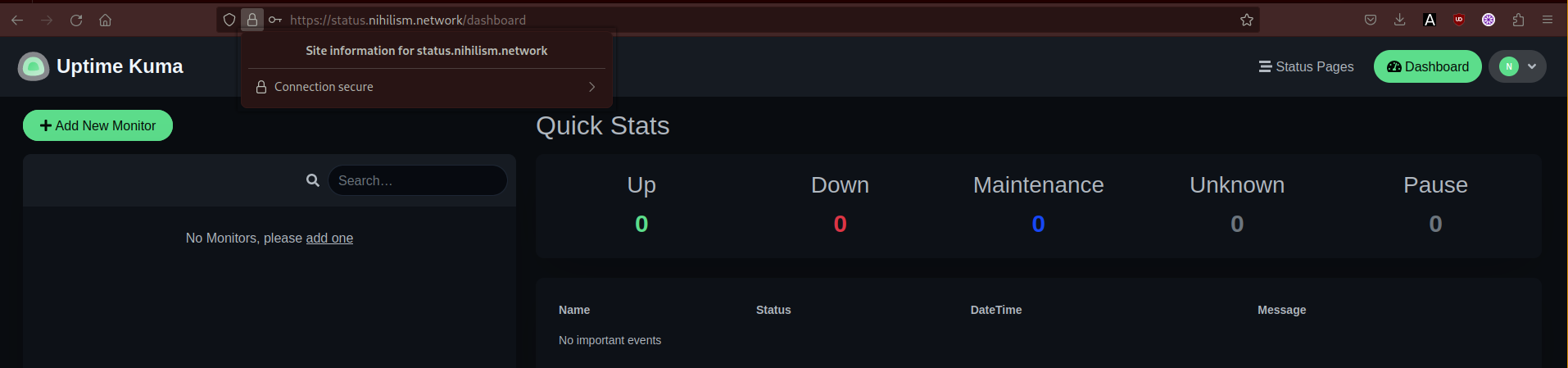

Next, you can put it behind an nginx reverse proxy:

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ systemctl stop nginx

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ acme.sh --issue --standalone -d status.nihilism.network -k 4096

[ 10.8.0.2/24 ] [ home ] [/etc/nginx/sites-available]

→ vim status.nihilism.network.conf

[ 10.8.0.2/24 ] [ home ] [/etc/nginx/sites-available]

→ cat status.nihilism.network.conf

upstream statusbackend {
    server 127.0.0.1:3001;
}

server {
    listen 80;
    listen [::]:80;
    server_name status.nihilism.network;
    return 301 https://$server_name$request_uri;
}

server {
    listen 443 ssl http2;
    listen [::]:443 ssl http2;
    server_name status.nihilism.network;

    ssl_certificate /root/.acme.sh/status.nihilism.network/fullchain.cer;
    ssl_trusted_certificate /root/.acme.sh/status.nihilism.network/status.nihilism.network.cer;
    ssl_certificate_key /root/.acme.sh/status.nihilism.network/status.nihilism.network.key;

    ssl_protocols TLSv1.3 TLSv1.2;
    ssl_ciphers 'TLS13-CHACHA20-POLY1305-SHA256:TLS13-AES-256-GCM-SHA384:TLS13-AES-128-GCM-SHA256:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256';
    ssl_prefer_server_ciphers on;
    ssl_session_cache shared:SSL:10m;
    ssl_session_timeout 10m;
    ssl_session_tickets off;
    ssl_ecdh_curve auto;
    ssl_stapling on;
    ssl_stapling_verify on;
    resolver 80.67.188.188 80.67.169.40 valid=300s;
    resolver_timeout 10s;

    add_header X-XSS-Protection "1; mode=block"; #Cross-site scripting
    add_header X-Frame-Options "SAMEORIGIN" always; #clickjacking
    add_header X-Content-Type-Options nosniff; #MIME-type sniffing
    add_header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload";

    location / {
        proxy_pass http://statusbackend;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "Upgrade";
    }
}

[ 10.8.0.2/24 ] [ home ] [/etc/nginx/sites-available]

→ ln -s /etc/nginx/sites-available/status.nihilism.network.conf /etc/nginx/sites-enabled

[ 10.8.0.2/24 ] [ home ] [/etc/nginx/sites-available]

→ nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[ 10.8.0.2/24 ] [ home ] [/srv/uptimekuma]

→ systemctl start nginx
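Once nginx is running, the port 80 server block should answer every plain-HTTP request with a 301 pointing at the HTTPS site. Below is a minimal Python sketch for checking that from any machine. The helper names `fetch_redirect` and `redirects_to_https` are hypothetical, and the check looks only at the status code and Location header, not at the certificate.

```python
import http.client
from typing import Optional, Tuple


def fetch_redirect(host: str, port: int = 80, path: str = "/",
                   timeout: float = 5.0) -> Tuple[int, Optional[str]]:
    """Send one plain-HTTP GET without following redirects.

    Returns (status code, Location header or None).
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def redirects_to_https(host: str, port: int = 80, path: str = "/") -> bool:
    """True if the server answers 301 with an https:// Location."""
    status, location = fetch_redirect(host, port, path)
    return status == 301 and location is not None and location.startswith("https://")
```

For example, `redirects_to_https("status.nihilism.network")` would be expected to return True with the configuration above, assuming the hostname resolves to the machine running nginx.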

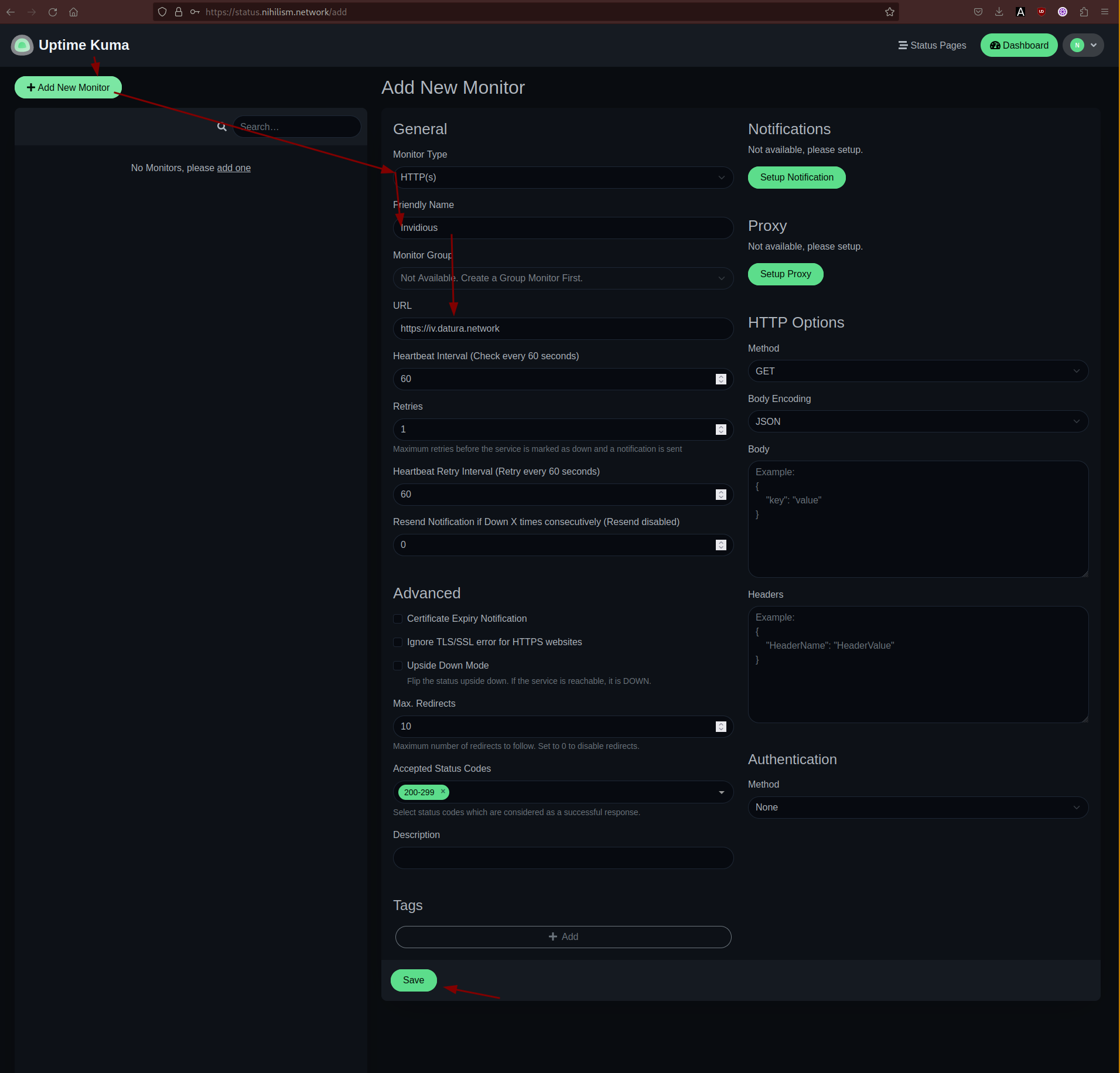

From there we're going to add hosts to check the uptime:

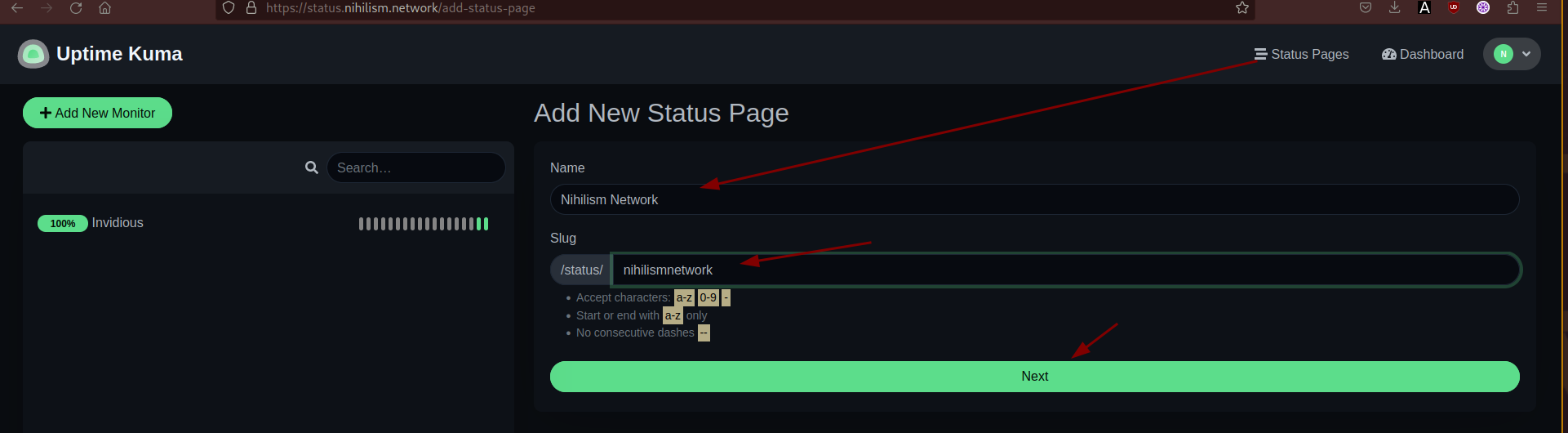

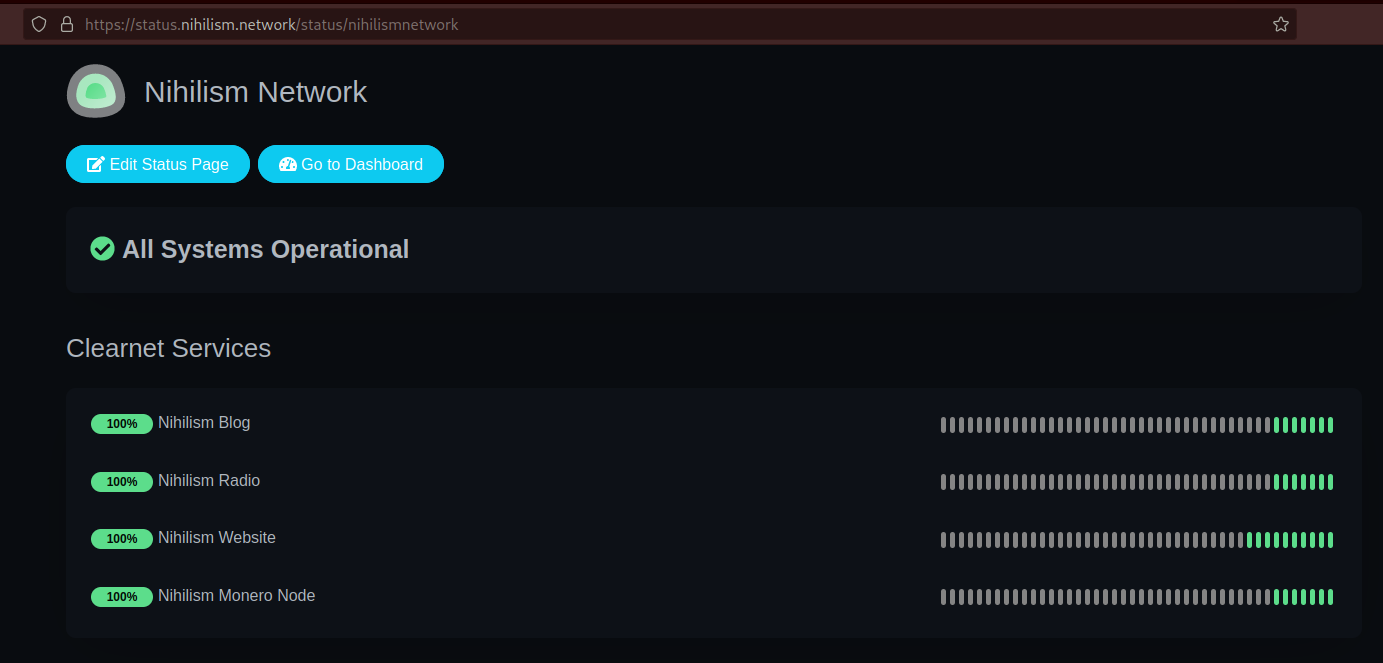

To make it more organised we'll create a status page for a group of hosts to monitor:

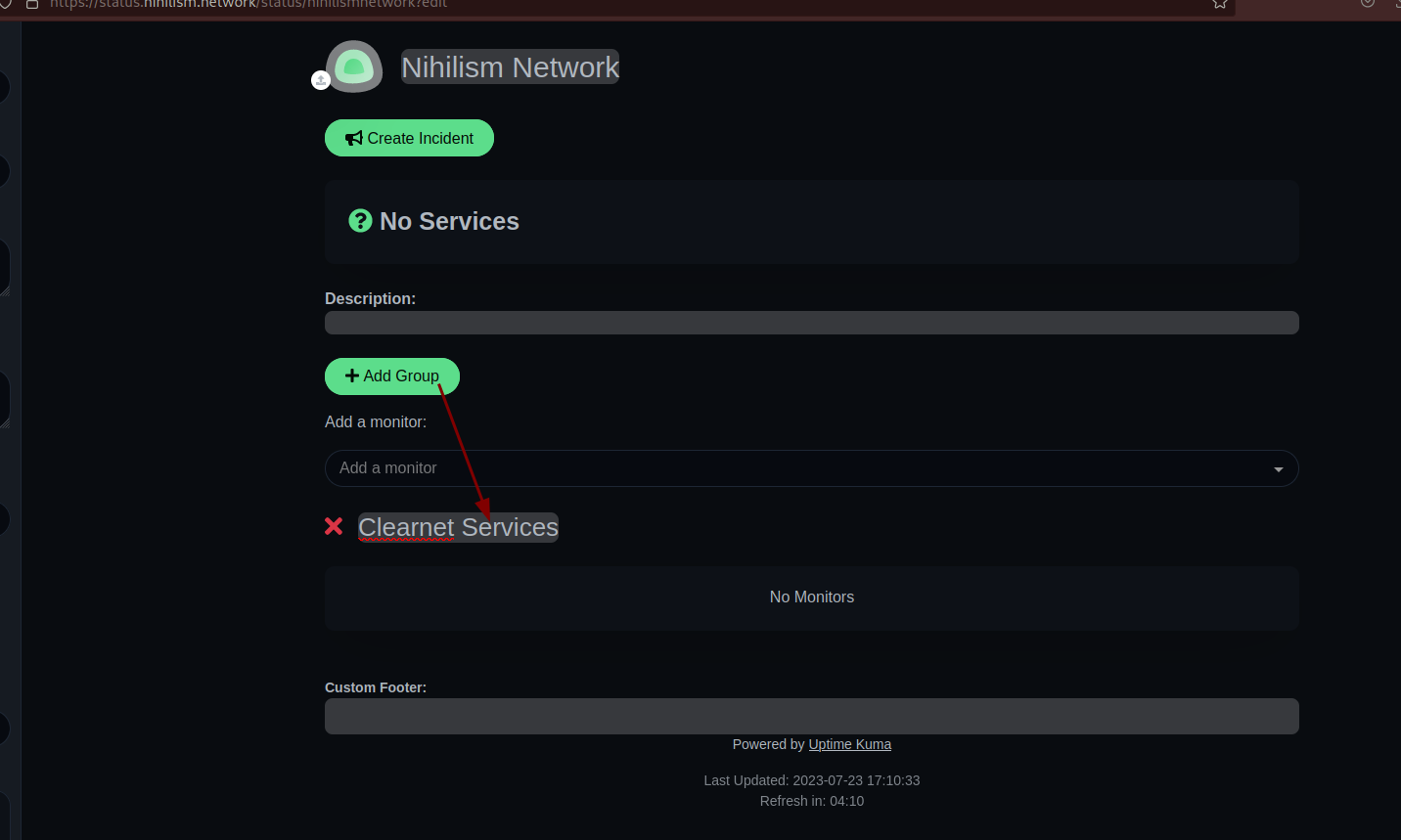

Here we're going to add the hosts to track:

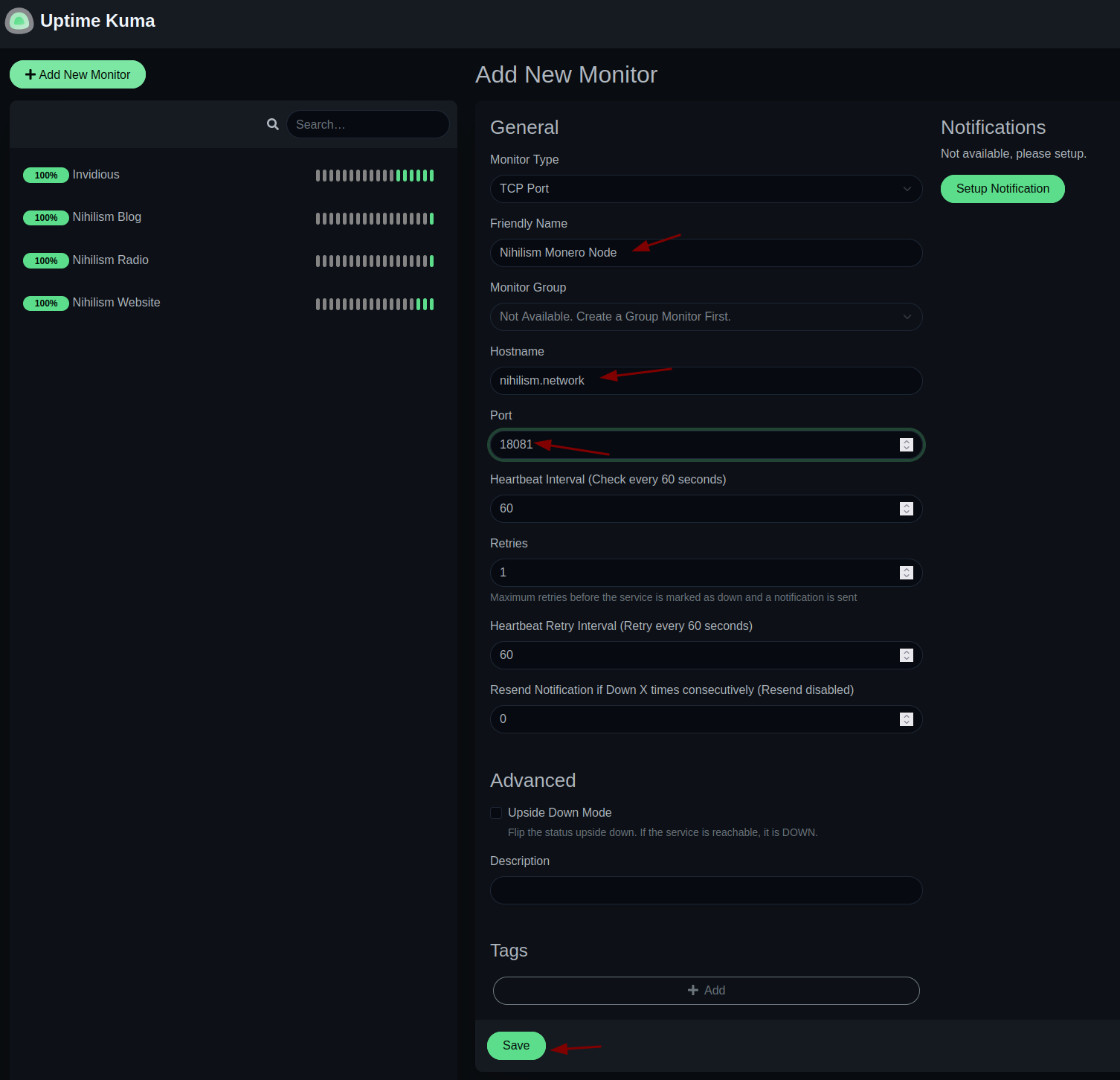

We can also monitor specific TCP ports, such as port 18081 for a Monero node.

After populating them it looks like so:

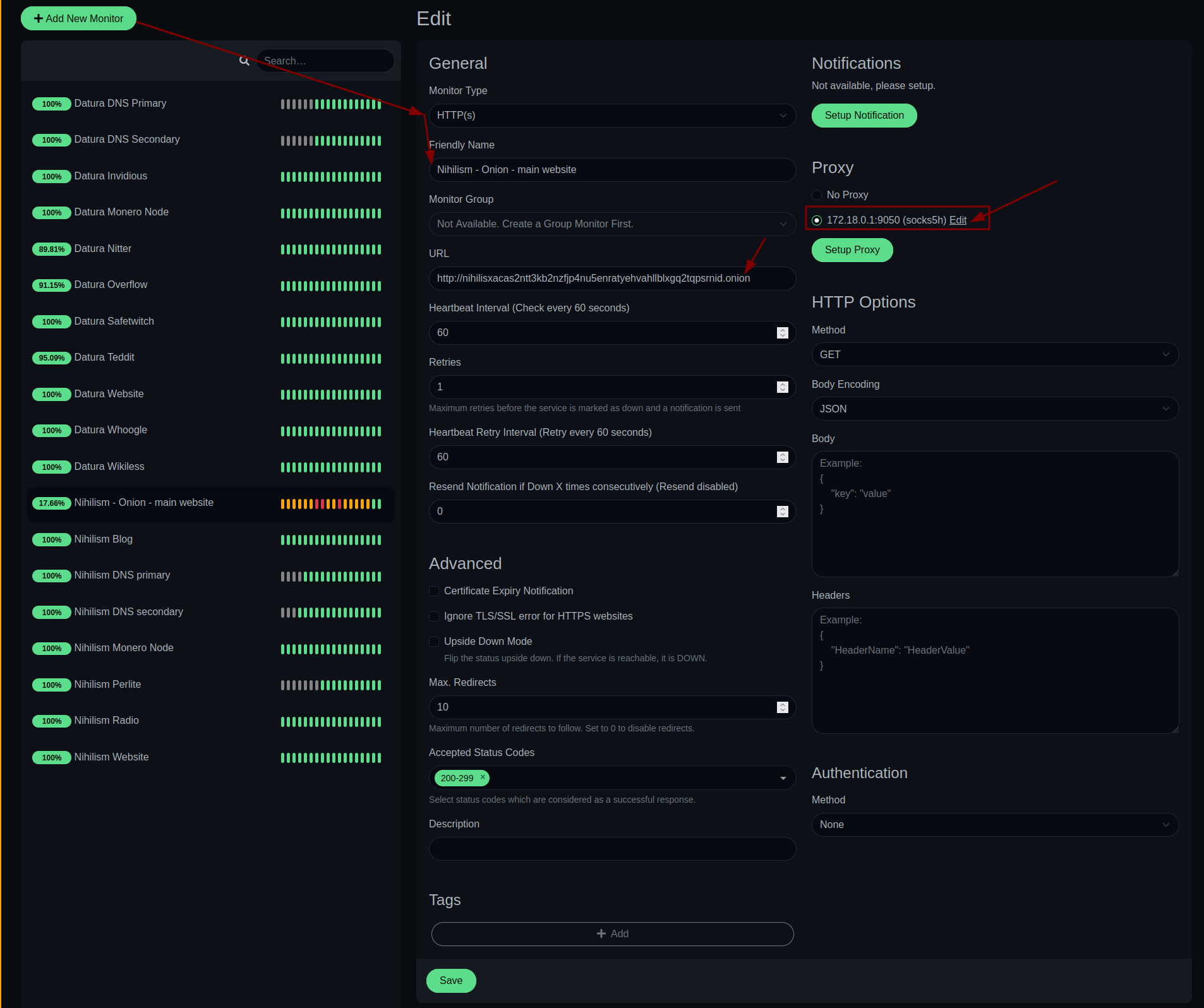

Next step: monitoring onion services.

To do that, you need to make sure that the Docker container can access the Tor SOCKS proxy port on the host machine, like so:

[ 10.8.0.2/24 ] [ home ] [~]

→ cat /etc/tor/torrc | grep 9050

SocksPort 0.0.0.0:9050

[ 10.8.0.2/24 ] [ home ] [~]

→ systemctl restart tor@default

[ 10.8.0.2/24 ] [ home ] [~]

→ systemctl status tor@default

● tor@default.service - Anonymizing overlay network for TCP

Loaded: loaded (/lib/systemd/system/tor@default.service; enabled-runtime; preset: enabled)

Active: active (running) since Sun 2023-07-23 17:38:19 CEST; 9s ago

Process: 93828 ExecStartPre=/usr/bin/install -Z -m 02755 -o debian-tor -g debian-tor -d /run/tor (code=exited, status=0/SUCCESS)

Process: 93830 ExecStartPre=/usr/bin/tor --defaults-torrc /usr/share/tor/tor-service-defaults-torrc -f /etc/tor/torrc --RunAsDaemon 0 --verify-config (code=exited, status=0/SUCCESS)

Main PID: 93831 (tor)

Tasks: 9 (limit: 9483)

Memory: 35.0M

CPU: 3.659s

CGroup: /system.slice/system-tor.slice/tor@default.service

├─93831 /usr/bin/tor --defaults-torrc /usr/share/tor/tor-service-defaults-torrc -f /etc/tor/torrc --RunAsDaemon 0

└─93832 /usr/bin/obfs4proxy

Jul 23 17:38:20 home Tor[93831]: Bootstrapped 1% (conn_pt): Connecting to pluggable transport

Jul 23 17:38:20 home Tor[93831]: Opening Control listener on /run/tor/control

Jul 23 17:38:20 home Tor[93831]: Opened Control listener connection (ready) on /run/tor/control

Jul 23 17:38:20 home Tor[93831]: Bootstrapped 2% (conn_done_pt): Connected to pluggable transport

Jul 23 17:38:20 home Tor[93831]: Bootstrapped 10% (conn_done): Connected to a relay

Jul 23 17:38:20 home Tor[93831]: Bootstrapped 14% (handshake): Handshaking with a relay

Jul 23 17:38:20 home Tor[93831]: Bootstrapped 15% (handshake_done): Handshake with a relay done

Jul 23 17:38:20 home Tor[93831]: Bootstrapped 75% (enough_dirinfo): Loaded enough directory info to build circuits

Jul 23 17:38:20 home Tor[93831]: Bootstrapped 95% (circuit_create): Establishing a Tor circuit

Jul 23 17:38:21 home Tor[93831]: Bootstrapped 100% (done): Done

[ 10.8.0.2/24 ] [ home ] [/etc/nginx/sites-available]

→ docker container list

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a77a35a135dd louislam/uptime-kuma:latest "/usr/bin/dumb-init …" 36 minutes ago Up 36 minutes (healthy) 0.0.0.0:3001->3001/tcp, :::3001->3001/tcp uptime-kuma

[ 10.8.0.2/24 ] [ home ] [/etc/nginx/sites-available]

→ docker exec -it a77 bash

root@a77a35a135dd:/app# ip a

bash: ip: command not found

root@a77a35a135dd:/app# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.18.0.2 a77a35a135dd

[ 10.8.0.2/24 ] [ home ] [~]

→ ip a | grep inet | grep 172.18

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-81f77ed904e1
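The two outputs above suggest that the container (172.18.0.2) and the host's bridge interface (172.18.0.1/16) sit on the same Docker network. From inside the container, the host's Tor SOCKS port would then presumably be reachable at 172.18.0.1:9050. Below is a minimal Python sketch of that subnet check, using only the addresses shown above; `same_bridge` is a hypothetical helper name.

```python
import ipaddress


def same_bridge(host_iface: str, container_ip: str) -> bool:
    """True if container_ip lies inside the network of the host's bridge interface."""
    iface = ipaddress.ip_interface(host_iface)
    return ipaddress.ip_address(container_ip) in iface.network


host_iface = "172.18.0.1/16"   # from `ip a` on the host (br-81f77ed904e1)
container_ip = "172.18.0.2"    # from /etc/hosts inside the container

print(ipaddress.ip_interface(host_iface).network)   # 172.18.0.0/16
print(same_bridge(host_iface, container_ip))        # True
```

The addresses are specific to this machine. Docker may assign a different subnet on another host, so read the values from your own `ip a` and container `/etc/hosts` output.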

If you want to make your dashboards available to the public, just share the links to your status pages, like the ones below:

Datura Network Status page https://status.nihilism.network/status/daturanetwork

Nihilism Network Status page https://status.nihilism.network/status/nihilismnetwork

UPDATE NOTE: if your Docker container gets updated and the database gets corrupted, this is how to recover. Go to Dashboard > Settings > Backup > Export Backup (JSON file). Run docker-compose down, delete the uptime-kuma-data folder, then run docker-compose up -d --force-recreate. Create the admin account again, go to Settings > Backup > Import Backup (JSON file), and finally redo the status pages.

The reason for this is that the developers change the database from one version to the next without caring about breaking changes. Hence the whole procedure above to fix an Uptime Kuma database failure after an upgrade.
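As a complement to the JSON export (this is not part of the procedure above, only a hedged suggestion), you could also keep a timestamped copy of the whole data folder before each upgrade. Below is a minimal Python sketch. It assumes the container has been stopped first with docker-compose down, so that kuma.db and its -wal and -shm files are not being written to. `snapshot_data_dir` and the backup location are hypothetical.

```python
import shutil
import time
from pathlib import Path


def snapshot_data_dir(data_dir: str, backup_root: str) -> Path:
    """Copy data_dir into backup_root/<dirname>-<timestamp> and return the new path."""
    src = Path(data_dir)
    if not src.is_dir():
        raise FileNotFoundError(f"{src} is not a directory")
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = Path(backup_root) / f"{src.name}-{stamp}"
    # copytree raises if dest already exists, so an earlier snapshot is never overwritten.
    shutil.copytree(src, dest)
    return dest


if __name__ == "__main__":
    # Paths are assumptions based on the /srv/uptimekuma layout shown earlier.
    source = Path("/srv/uptimekuma/uptime-kuma-data")
    if source.is_dir():
        print(snapshot_data_dir(str(source), "/srv/uptimekuma/backups"))
```

Whether restoring such a copy under a newer image works depends on the version, so the JSON export remains the procedure described above.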


Contact: nihilist@nihilism.network (PGP)